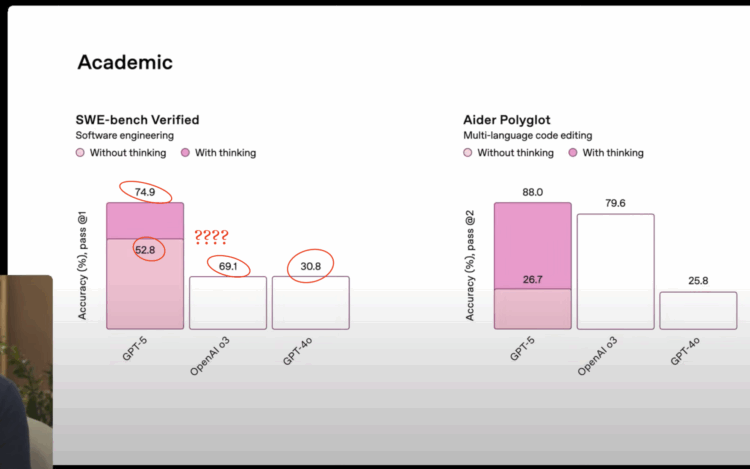

AI companies like to say that their tools are close to, or have already reached, a level of “intelligence” that makes them like having a PhD assistant in your pocket. Claus Wilke argues that the classification is misguided.

> Now, presumably AI models have the required tenacity for a PhD (as long as somebody pays for the token budget), and I just said exceptional intelligence is not required. So what’s keeping current AI models from PhD-level performance? In my opinion, it’s the ability to actually reason, to introspect and self-reflect, and to develop and update over time an accurate mental model of their research topic. And most importantly, since PhD-level research occurs at the edge of human knowledge, it’s the ability to deal with a situation and set of facts that few people have encountered or written about.

In practical terms, I’m pretty sure most people do not want a PhD-level assistant. They want immediate answers. They want a diligent intern.

A PhD assistant is going to answer your question with more questions, and then five to seven years later, you will finally get an “answer” that might be correct, but you won’t know for sure because there will be a lot of uncertainty attached. The good news is that you might be able to explore more with further research and future directions. You will have to do that on your own, though, or find another PhD assistant, because the original one has since moved on to a different interest.

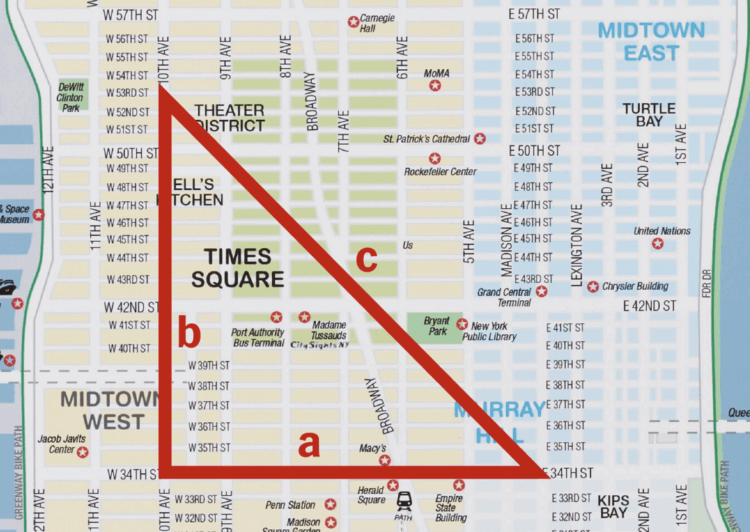

Visualize This: The FlowingData Guide to Design, Visualization, and Statistics (2nd Edition)
