A Tesla Model S, while on “Autopilot”, crashed into a parked truck, killing one and injuring another. Tesla claimed in a lawsuit that the data about the crash could not be found. So lawyers for the plaintiffs tried a different route. For the Washington Post, Trisha Thadani and Faiz Siddiqui report:

That’s when they turned to hacker greentheonly, who had a robust social media following for his work recovering data from damaged Teslas and posting his findings on X.

[…]

Inside a Starbucks near the Miami airport, the plaintiffs’ attorneys watched as greentheonly fired up his ThinkPad computer and plugged in a flash drive containing a forensic copy of the Autopilot unit’s contents. Within minutes, he found key data that was marked for deletion — along with confirmation that Tesla had received the collision snapshot within moments of the crash — proving the critical information should have actually been accessible all along.

Even if the data had been corrupted or lost initially, which it seems it wasn’t, Tesla should know its systems well enough to recover the data some other way. One would hope so, at least, for a company pushing for ubiquitous self-driving cars. Either that, or Tesla should hire greentheonly to fix all its systems.

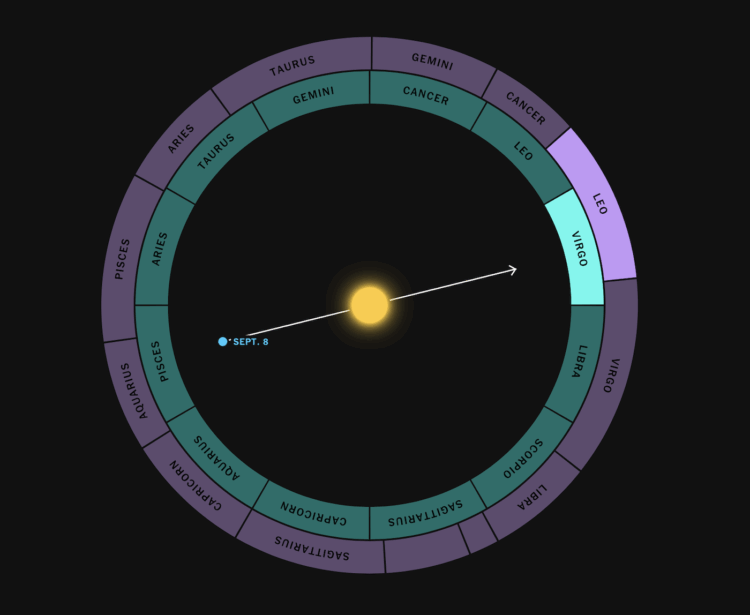

Visualize This: The FlowingData Guide to Design, Visualization, and Statistics (2nd Edition)