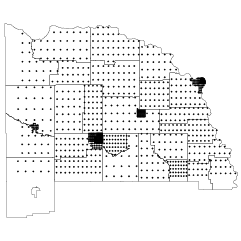

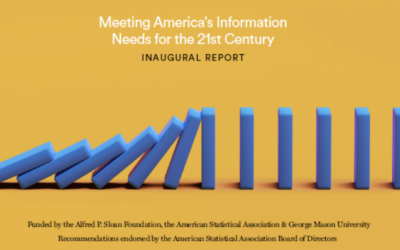

It continues to grow more difficult for federal statistical agencies to accurately measure…

Statistics

More than mean, median, and mode.

-

Federal data at risk

-

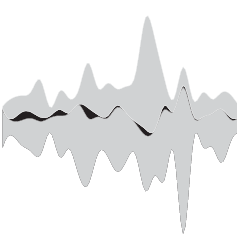

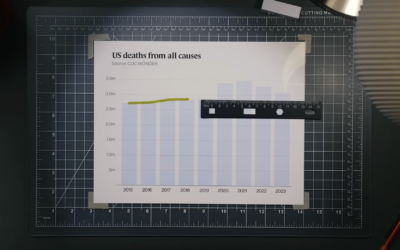

Estimating Covid deaths

It’s impossible to know the exact number of Covid deaths worldwide, because consistent…

-

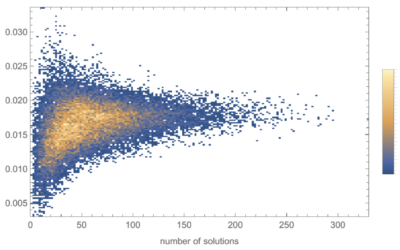

Searching for the hardest and easiest Spelling Bee puzzles

Christopher Wolfram explains his “unnecessarily detailed analysis” of the spelling game from the…

-

AI-generated email from a friend

Neven Mrgan describes what it was like to get an AI-generated email from…

-

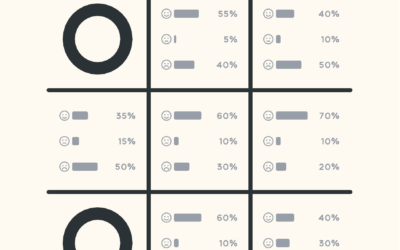

Probabilistic Tic-Tac-Toe

Thinking about life and randomness, Cameron Sun modified the classic game of Tic-Tac-Toe.…

-

Wheel of Fortune analysis for the win

A Wheel of Fortune contestant employed strategies outlined in a NYT Upshot analysis…

-

WildChat, a dataset of ChatGPT interactions

In case you need a large dataset to train your chatbot — and…

-

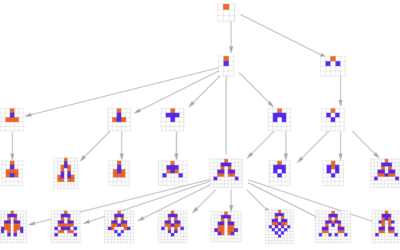

Mathematical model for biological evolution and machine learning

Stephen Wolfram gets into modeling biological evolution:

Why does biological evolution work? And,…

-

Regulating deepfakes

It continues to get easier to take someone’s face and put that person…

-

OpenAI previews voice synthesis

OpenAI previewed Voice Engine, a model to generate voices that mimic, using just…

-

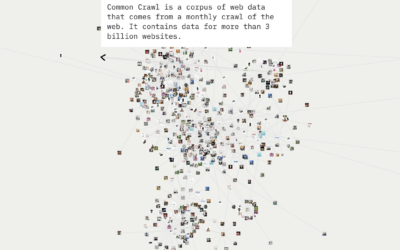

Examining the dataset driving machine learning systems

For Knowing Machines, an ongoing research project that examines the innards of machine…

-

National identity stereotypes through generative AI

For Rest of World, Victoria Turk breaks down bias in generative AI in…

-

Flipbook Experiment, like the Telephone game but visual

This looks fun. The Pudding is running an experiment that functions like a…

-

Language-based AI to chat with her dead husband

For the past few years, Laurie Anderson has been using an AI chatbot…

-

Love: math or magic?

This American Life tells the tales as old as time:

When it comes…

-

DNA face to facial recognition in attempt to find suspect

In an effort to find a suspect in a 1990 murder, there was…

-

Collection of NBA basketball data sources and apps

If you’re into basketball data, Sravan Pannala is keeping a running list of…

-

Coin flips might tend towards the same side they started

The classic coin flip is treated as a fair way to make decisions,…

-

AI-based things in 2023

There were many AI-based things in 2023. Simon Willison outlined what we learned…

-

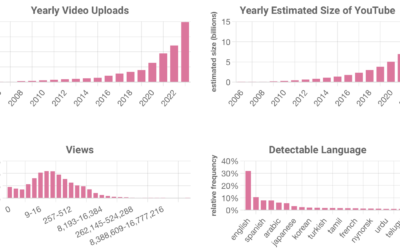

Estimating the size of YouTube

YouTube doesn’t offer numbers for how big they are, so Ethan Zuckerman and…

Visualize This: The FlowingData Guide to Design, Visualization, and Statistics (2nd Edition)