Testing citation skills and overconfidence of AI chatbots

When you enter a query in a traditional search engine, you get a list of results. They are possible answers to your question, and you decide which sources you want to trust. On the other hand, when you query an AI chatbot, you get a limited number of answers, written as sentences, that sound confident in context.

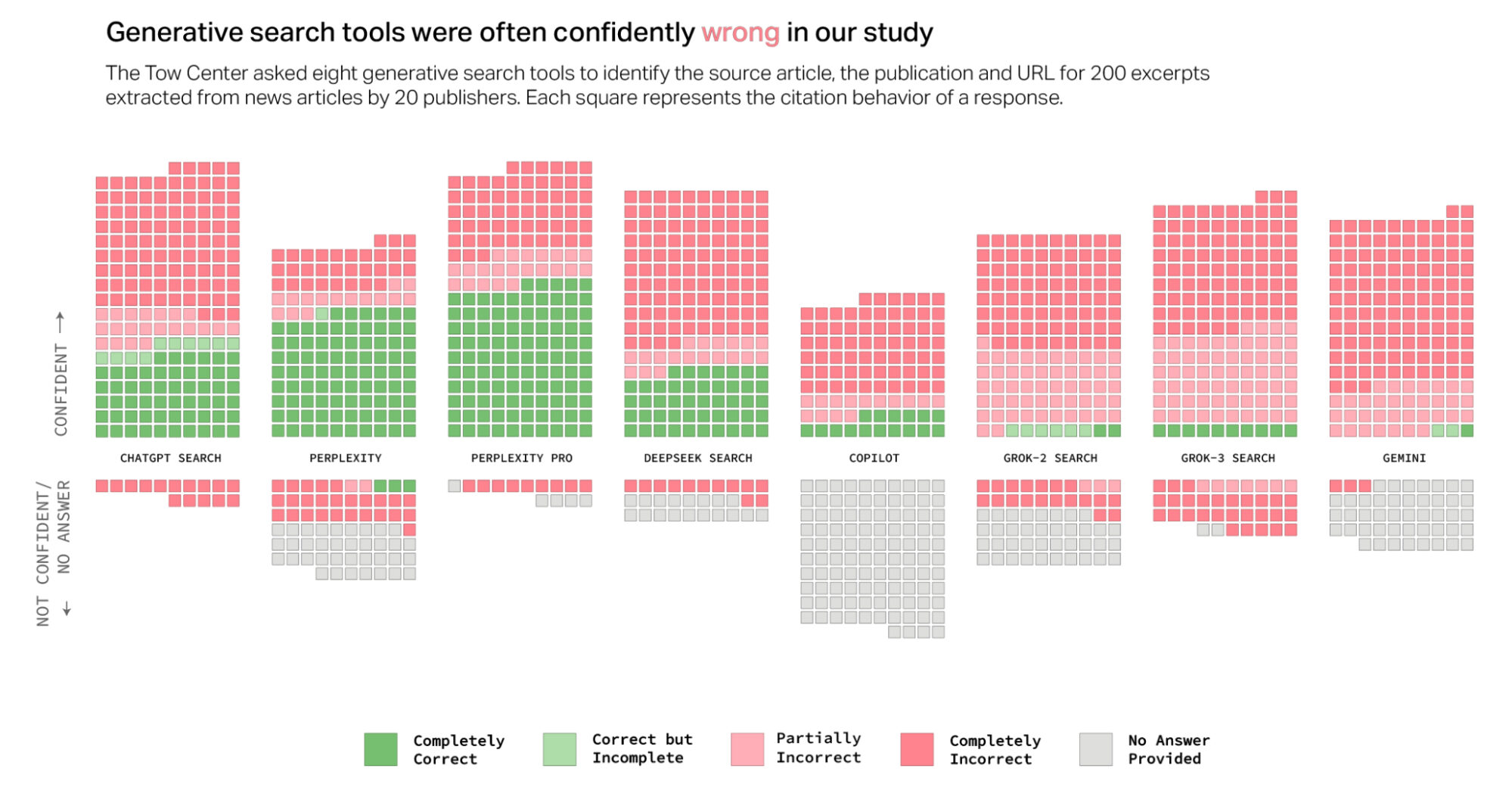

For Columbia Journalism Review, Klaudia Jaźwińska and Aisvarya Chandrasekar tested this accuracy and confidence by asking several chatbots to cite articles:

> Overall, the chatbots often failed to retrieve the correct articles. Collectively, they provided incorrect answers to more than 60 percent of queries. Across different platforms, the level of inaccuracy varied, with Perplexity answering 37 percent of the queries incorrectly, while Grok 3 had a much higher error rate, answering 94 percent of the queries incorrectly.

So not great.

I am sure someone is working on improving that accuracy, but we’ll have to develop our own skills in separating truth from junk, just like we have with past online things. Going forward, maybe keep an eye out for the younger and older generations who tend to accept online things as automatic truth. Things could get dicey.
