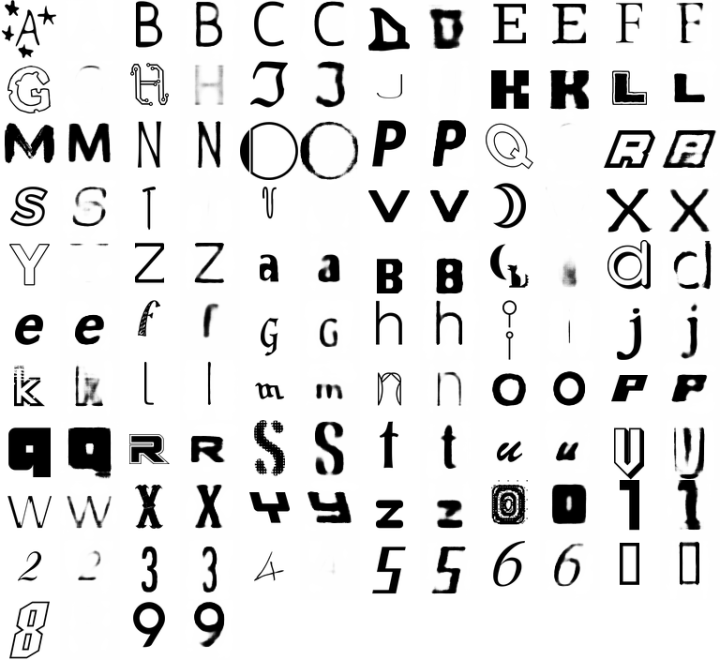

Erik Bernhardsson downloaded 50,000 fonts and then threw them at a neural network to see what sort of letters a model might come up with.

> These are all characters drawn from the test set, so the network hasn’t seen any of them during training. All we’re telling the network is (a) what font it is (b) what character it is. The model has seen other characters of the same font during training, so what it does is to infer from those training examples to the unseen test examples.
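The setup in the quote can be sketched in a few lines of Python. This is a minimal sketch under assumptions: the post does not describe the architecture, so the character set, the vector size (`EMBED_DIM`), and the helpers `split_pairs`, `init_embeddings`, and `encode` are all hypothetical. It illustrates the two ideas in the quote: the test set holds out individual (font, character) pairs rather than whole fonts, and the network's only input is a per-font vector plus a one-hot code saying which character to draw.

```python
import random

# Hypothetical setup: the post does not give the character set or vector size.
CHARS = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789"
EMBED_DIM = 8


def split_pairs(n_fonts, chars=CHARS, test_frac=0.1, seed=0):
    """Hold out random (font, character) pairs rather than whole fonts.

    Each font keeps at least one character in the training set, so the model
    has seen that font's style before it is asked to draw an unseen glyph.
    """
    rng = random.Random(seed)
    train, test = [], []
    for font in range(n_fonts):
        held_out = [c for c in chars if rng.random() < test_frac]
        if len(held_out) == len(chars):  # never hold out an entire font
            held_out = held_out[:-1]
        held = set(held_out)
        for c in chars:
            (test if c in held else train).append((font, c))
    return train, test


def init_embeddings(n_fonts, dim=EMBED_DIM, seed=0):
    """One small random vector per font; training would adjust these."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_fonts)]


def encode(font, char, embeddings, chars=CHARS):
    """Network input: the font's vector followed by a one-hot character code."""
    one_hot = [1.0 if c == char else 0.0 for c in chars]
    return embeddings[font] + one_hot


train, test = split_pairs(n_fonts=100)
embeddings = init_embeddings(n_fonts=100)
x = encode(*test[0], embeddings)  # input for one held-out (font, character) pair
```

A decoder network (not shown) would map `x` to a bitmap of the glyph. Because a test font's vector is fitted only on that font's other characters, asking for an unseen character changes just the one-hot half of the input.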

I especially like the part where you can see a spectrum of generated fonts through varying parameters.
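The post doesn't spell out how that spectrum was produced. For a model that takes a learned vector per font, one common approach is to move through that vector space: blend two fonts' vectors step by step, or sweep a single coordinate while holding the rest fixed, then render the same character from each resulting vector. The sketch assumes that kind of model; `lerp`, `font_spectrum`, and `sweep_dimension` are hypothetical helpers, not Erik's code.

```python
def lerp(a, b, t):
    """Point a fraction t of the way from vector a to vector b."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def font_spectrum(emb_a, emb_b, steps):
    """Evenly spaced vectors from font A (t=0) to font B (t=1), endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(emb_a, emb_b, i / (steps - 1)) for i in range(steps)]


def sweep_dimension(emb, dim, values):
    """Copies of one font vector with a single coordinate set to each value."""
    out = []
    for v in values:
        e = list(emb)  # copy so the original vector is untouched
        e[dim] = v
        out.append(e)
    return out


print(font_spectrum([0.0, 0.0], [1.0, 2.0], steps=3))
# [[0.0, 0.0], [0.5, 1.0], [1.0, 2.0]]
```

Straight-line blending is the simplest choice; other interpolation schemes exist, and nothing in the post indicates which, if any, was used.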

Visualize This: The FlowingData Guide to Design, Visualization, and Statistics (2nd Edition)