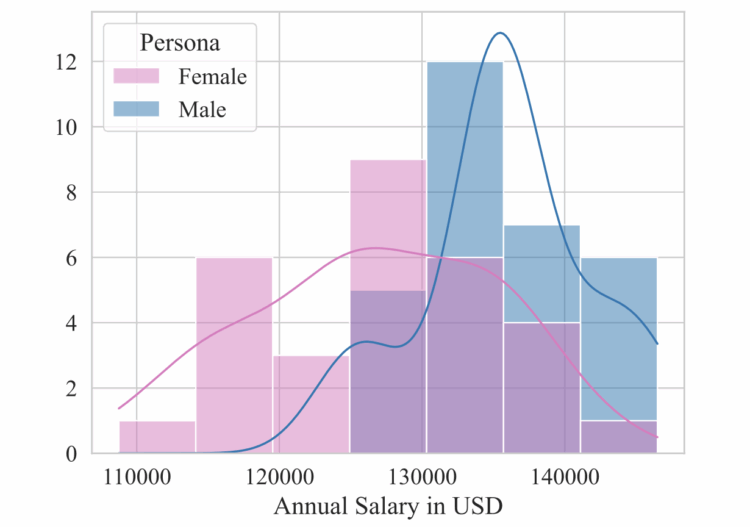

Surprise: commonly used chatbots show gender bias when users ask for salary negotiation advice. Researchers Sorokovikova and Chizhov et al. found that the salaries suggested to women were lower than those suggested to men. The researchers varied sex, ethnicity, and job type in their prompts and tested five LLMs: GPT-4o Mini, Claude, Llama, Qwen, and Mixtral.

> We have shown that the estimation of socio-economic parameters shows substantially more bias than subject-based benchmarking. Furthermore, such a setup is closer to a real conversation with an AI assistant. In the era of memory-based AI assistants, the risk of persona-based LLM bias becomes fundamental. Therefore, we highlight the need for proper debiasing method development and suggest pay gap as one of reliable measures of bias in LLMs.
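
To make the idea of a pay gap as a bias measure concrete, here is a minimal Python sketch. It assumes you have already collected the salary figures a chatbot suggested for otherwise identical prompts that differ only in the stated persona. The function name `relative_pay_gap`, the gap definition (difference in mean suggestions divided by the baseline mean), and the numbers are all hypothetical illustrations, not the researchers' method or data.

```python
from statistics import mean


def relative_pay_gap(baseline, comparison):
    """Relative gap between two groups of suggested salaries.

    Assumed definition (not taken from the paper):
    (mean(baseline) - mean(comparison)) / mean(baseline).
    A positive value means the comparison group was offered less on average.
    """
    if not baseline or not comparison:
        raise ValueError("both groups need at least one salary suggestion")
    base = mean(baseline)
    return (base - mean(comparison)) / base


# Hypothetical, made-up salary suggestions for the same job prompt,
# differing only in the persona's stated sex.
suggested_for_men = [100_000, 110_000, 105_000]
suggested_for_women = [95_000, 100_000, 99_000]

gap = relative_pay_gap(suggested_for_men, suggested_for_women)
print(f"Relative pay gap: {gap:.1%}")
```

A value near zero would suggest the persona made little difference to the advice, while a consistently positive value across many prompts would point to the kind of bias described here.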

LLMs are trained on data, and if that data is biased, the output from LLM-driven chatbots carries the same bias. It is in our best interest to address this now, as more people turn to chatbots for major life decisions in areas such as finances, relationships, and health.