Hardware for AI uses a whole lot of energy while training on data from the internets, processing queries, and hallucinating surprising solutions. Alex de Vries-Gao, from the Institute for Environmental Studies in the Netherlands, published estimates of how much energy that is and compared them to the electricity demand of entire countries.

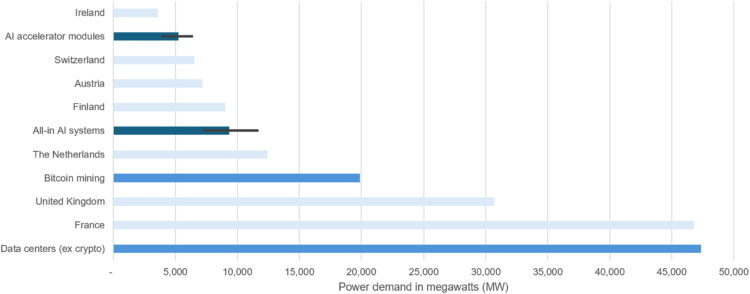

Over the full year of 2025, a power demand of 5.3–9.4 GW could result in 46–82 TWh of electricity consumption (again, without further production output in 2025). This is comparable to the annual electricity consumption of countries such as Switzerland, Austria, and Finland (see Figure 2; Data S1, sheet 6). As the International Energy Agency estimated that all data centers combined (excluding crypto mining) consumed 415 TWh of electricity in 2024, specialized AI hardware could already be representing 11%–20% of these figures.
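The arithmetic in that excerpt is easy to check yourself. Here is a rough sketch in Python, assuming the hardware draws constant power around the clock for a non-leap year (8,760 hours). The function names are made up for illustration and the inputs are just the figures quoted above. Note that the share compares a 2025 estimate against the IEA's 2024 total, as the excerpt does.

```python
# Back-of-the-envelope check of the quoted figures.
# Assumption: constant power draw for every hour of a non-leap year.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours

# IEA estimate for all data centers in 2024 (excluding crypto mining), from the excerpt.
ALL_DATA_CENTERS_TWH = 415.0


def annual_twh(power_gw: float) -> float:
    """Convert a constant power demand in GW to yearly consumption in TWh."""
    gwh = power_gw * HOURS_PER_YEAR  # GW x hours = GWh
    return gwh / 1000                # 1,000 GWh = 1 TWh


def share_of_data_centers(twh: float, total_twh: float = ALL_DATA_CENTERS_TWH) -> float:
    """Fraction of total data center consumption that a given TWh figure represents."""
    return twh / total_twh


for gw in (5.3, 9.4):
    twh = annual_twh(gw)
    share = share_of_data_centers(twh)
    print(f"{gw} GW -> {twh:.0f} TWh, about {share:.0%} of {ALL_DATA_CENTERS_TWH:.0f} TWh")
```

Under those assumptions, the low and high ends come out to roughly 46 and 82 TWh, or about 11% and 20% of the 415 TWh total, which matches the ranges in the excerpt.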

There are many assumptions behind the estimates, and the real numbers could be lower or higher depending on the unknowns, but most signs point to steep increases.

We should probably plan for that. It doesn’t seem like this AI train is going to slow down any time soon. (via Wired)