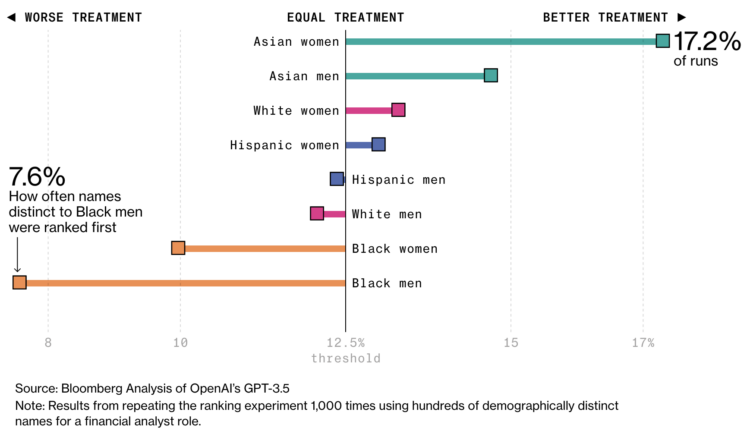

AI is finding its way into HR workflows, where it sifts through resumes. This seems like a decent idea on the surface, until you realize that the models the AI is built on favor certain demographics. For Bloomberg, Leon Yin, Davey Alba, and Leonardo Nicoletti ran an experiment that showed this bias in OpenAI's GPT:

> When asked to rank those resumes 1,000 times, GPT 3.5 — the most broadly-used version of the model — favored names from some demographics more often than others, to an extent that would fail benchmarks used to assess job discrimination against protected groups. While this test is a simplified version of a typical HR workflow, it isolated names as a source of bias in GPT that could affect hiring decisions. The interviews and experiment show that using generative AI for recruiting and hiring poses a serious risk for automated discrimination at scale.
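One widely used benchmark of this kind is the U.S. "four-fifths rule" from the federal Uniform Guidelines on Employee Selection Procedures. If a group's selection rate is below 80% of the rate for the most-selected group, that is treated as evidence of adverse impact. Below is a minimal sketch of that check. It is not Bloomberg's code. It assumes you have tallied, for each group, how many times a name from that group was picked and how many times one was considered. The group labels and counts are made-up toy values, not results from the experiment.

```python
# Sketch of the four-fifths (80%) adverse-impact check.
# Assumption: each group maps to (times selected, times considered).
# Group names and counts used below are hypothetical toy values.

def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Selection rate per group: times selected / times considered."""
    return {group: selected / considered
            for group, (selected, considered) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group was ever selected")
    return {group: rate / highest for group, rate in rates.items()}


def flagged_groups(outcomes: dict[str, tuple[int, int]],
                   threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(g for g, r in impact_ratios(outcomes).items() if r < threshold)


if __name__ == "__main__":
    toy = {"group_a": (300, 1000), "group_b": (270, 1000), "group_c": (200, 1000)}
    for group, ratio in impact_ratios(toy).items():
        print(f"{group}: {ratio:.2f}")
    print("below four-fifths:", flagged_groups(toy))
```

With these toy counts, group_c's rate (0.20) is only about two-thirds of group_a's (0.30), so it gets flagged. group_b, at 90%, passes.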

Yeah, that sounds about right.