The unassuming little Descriptive Camera made me rethink data. This project by Matt Richardson was on display at the ITP Spring Show. The basic premise is that you take a photo and the camera spits out a textual description of what it sees. The results are remarkably accurate, detailed, and humorous.

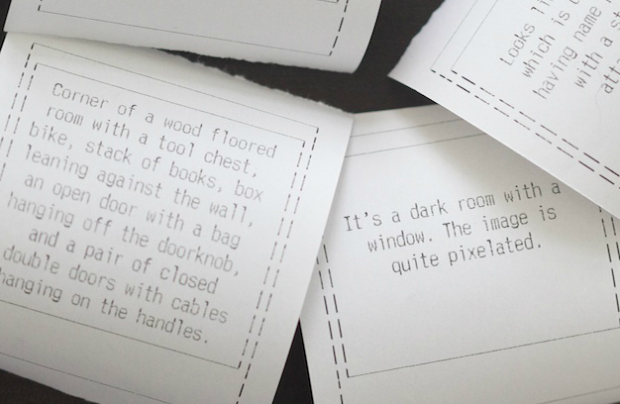

Here’s what my photo said:

> A woman wearing a seriously awesome jacket that is printed with yellow, blue, and grey circles looks at her ipad rather than making eye contact with Matt Richardson.

I mean, my jacket *IS* seriously awesome! So it not only described what it saw, but it also has great fashion sense. What a clever programmer, you may be thinking.

Ah, but it’s all a ruse, albeit a very novel and sly one. Matt described being underwhelmed by the EXIF data that digital cameras provide, which covers things like date, time, camera model, and sometimes geospatial info. He wanted to see a world where cameras actually told you about the contents of the photo. Undeterred by the fact that this type of technology isn’t feasible or practical right now, Matt decided to take a more human approach. He uses Amazon’s Mechanical Turk (or, alternatively, instant messages to his friends) to sidestep the computational task of providing a textual description of the photo.

So back to how this made me rethink data. It struck me that sometimes the important data isn’t what’s immediately in front of you. Sometimes it’s the shadow of the thing that’s important; sometimes it’s what envelops it, or connects it to its surroundings, or maybe even a subjective description of what it is. Sometimes it’s not a jacket… it’s a seriously awesome jacket.

This is novel because Matt Richardson takes the concept of an expert system (one that would normally be implemented by an AI, which would likely fall short in the quality of its assessment) and swaps it out, as if it were a variable (which it is), for a human-powered expert system via Mechanical Turk. Great concept and execution… what is the turnaround time on the responses? And does it even matter, if it works at the speed of a social network?

Mac, from what I witnessed and from the video on his site, it seemed to take under a minute to get a result. Faster than a Polaroid! :)

This prompts interesting thoughts about the relationship of handmade – manual – analog – automated – mechanical – digital. It is not black and white (in process, categorization, text, or image).

That’s not data you’re talking about, though. It’s Data’s dark twin… Lore.

Google Joseph Kosuth, e.g., his *One and Three Chairs*: http://uploads4.wikipaintings.org/images/joseph-kosuth/one-and-three-chairs.jpg

The next step is to feed the photos and descriptions into Skynet… ;)

Think of it this way, too: a brief description is all that the visually impaired get in many cases, so it needs to be good. Try visualizing scenes from the description alone, before looking at the picture. What are yellow, blue, and grey circles to a person who has never seen color?

Great comments, Nathan, and an interesting subject. I agree that context is important to the meaning and interpretation of data, and that the observer’s sentiments can influence the data, providing a new perspective and, more importantly, depth of information.

It might be worth mentioning that a service like this currently exists as an iPhone app for blind users: VizWiz. A blind person takes a picture of something and asks sighted users what is in the photo. These contributors respond with a description of the photo, thus transforming visual information into audio. App Store link: http://itunes.apple.com/us/app/vizwiz/id439686043?mt=8