Science is hard. Statistics is hard. Proving cause and effect is hard. Christie Aschwanden for FiveThirtyEight, with graphics by Ritchie King, discusses the uncertainty in data and the challenge of answering seemingly straightforward questions via the scientific method.

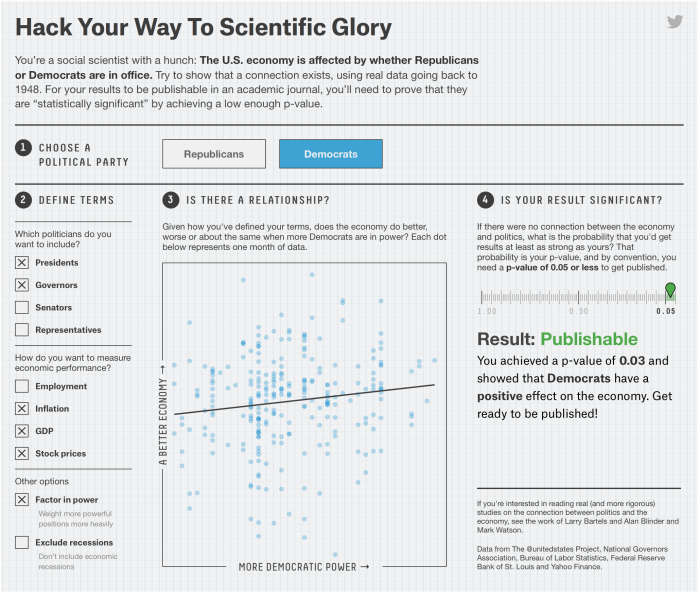

Leading the article is a description of p-hacking. Mess around with variables enough, and you too can get a p-value low enough to publish results in a distinguished journal.

A fine interactive lets you try this yourself: pick your variables and look for evidence that the political party in office affects the economy. The funny part is that you can easily “prove” that both parties are good for the economy.

> Which political party is best for the economy seems like a pretty straightforward question. But as you saw, it’s much easier to get a result than it is to get an answer. The variables in the data sets you used to test your hypothesis had 1,800 possible combinations. Of these, 1,078 yielded a publishable p-value, but that doesn’t mean they showed that which party was in office had a strong effect on the economy. Most of them didn’t.
>
> The p-value reveals almost nothing about the strength of the evidence, yet a p-value of 0.05 has become the ticket to get into many journals. “The dominant method used [to evaluate evidence] is the p-value,” said Michael Evans, a statistician at the University of Toronto, “and the p-value is well known not to work very well.”

I guess that means we have to think more like a statistician and less like a brainless, hypothesis-testing robot.
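
If you want to see the mechanics without the interactive, here is a minimal sketch in Python. It is not the FiveThirtyEight analysis and uses none of their data. It assumes something simpler: every "analysis" compares two groups of pure random noise, so there is no real effect to find, and the analyses are independent of each other (real p-hacking reuses the same data, so the rates would differ). The function names and settings are made up for illustration.

```python
import random
from statistics import NormalDist, mean


def noise_p_value(rng, n=30):
    """Two-sided z-test p-value comparing two groups of pure N(0, 1) noise.

    The standard deviation is known to be 1 here, so under this setup the
    p-value is uniformly distributed between 0 and 1.
    """
    group_a = [rng.gauss(0, 1) for _ in range(n)]
    group_b = [rng.gauss(0, 1) for _ in range(n)]
    # Standard error of the difference in means is sqrt(1/n + 1/n).
    z = (mean(group_a) - mean(group_b)) / (2 / n) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


def hacked_p_value(rng, n_specs):
    """Run n_specs unrelated analyses and report only the smallest p-value."""
    return min(noise_p_value(rng) for _ in range(n_specs))


def false_positive_rate(n_specs, n_trials=2000, alpha=0.05, seed=1):
    """Share of trials in which the best of n_specs analyses falls below alpha."""
    rng = random.Random(seed)
    hits = sum(hacked_p_value(rng, n_specs) < alpha for _ in range(n_trials))
    return hits / n_trials


if __name__ == "__main__":
    for n_specs in (1, 5, 20):
        rate = false_positive_rate(n_specs)
        print(f"{n_specs:>2} analyses tried: 'significant' in {rate:.0%} of trials")
```

With a single analysis, the rate should hover around the nominal 5 percent. With 20 independent tries, the chance that at least one dips below 0.05 is 1 − 0.95^20, or about 64 percent, even though there is nothing but noise in the data.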