For the Washington Post, Drew Harwell reports on the budding industry of AI-generated videos that make no sense, yet people watch and enjoy them anyway.

> Their power has spawned a wild cottage industry of AI-video makers, enticed by the possibility of infinite creation for minimal work. Adele, a 20-year-old student in Florida who spoke on the condition that only her first name be used because she fears harassment, told The Washington Post she is taking a break from college to focus on making money from her AI-video accounts. Another creator in Arizona who went viral with an AI airport kangaroo said he made $15,000 in commissions in three months, speaking on the condition of anonymity out of concern over online harassment.
>
> But the flood of financially incentivized “slop” has also given way to a strange new internet, where social media feeds overflow with unsettlingly lifelike imagery and even real videos can appear suspect. Some viral clips now barely rely on humans at all, with AI tools generating not just the imagery but also the ideas.

The novelty will wear off, but generative text-to-video tools will also keep improving in speed and quality. Curious times ahead.

My young son likes to draw, animate, write stories, and stitch together videos. He likes to make things. I tell him to use manual tools so that he learns the process and develops his imagination. But as things stand now, I wonder whether, and when, it will be time to introduce other tools.

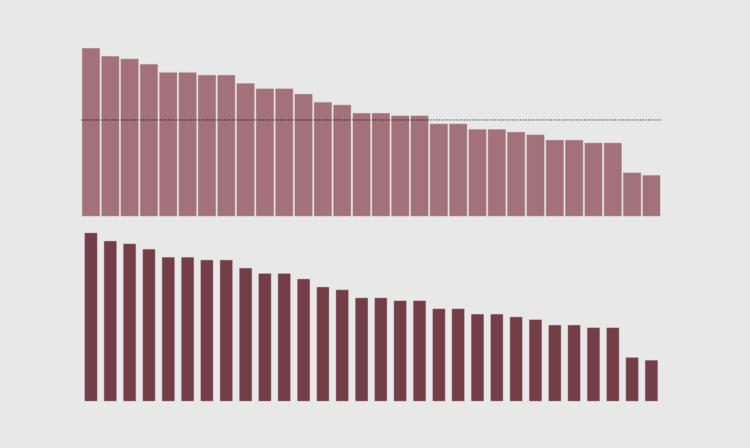

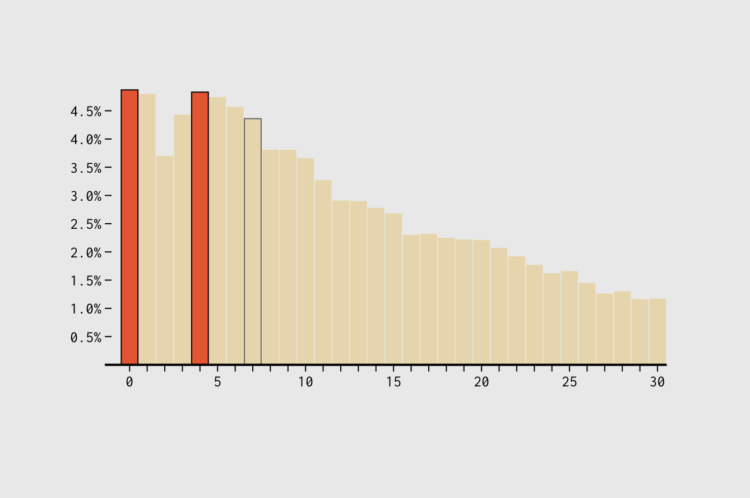

Visualize This: The FlowingData Guide to Design, Visualization, and Statistics (2nd Edition)