Statistics

More than mean, median, and mode.

-

Serial-Killer detector

Alec Wilkinson, reporting for The New Yorker, profiled Thomas Hargrove, who is deep…

-

Flawed hate crime data collection

Data can provide you with important information, but when the collection process is…

-

800 pages of Tinder data

Judith Duportail, writing for the Guardian, requested her personal data from dating service…

-

Uber got hacked and then paid the hackers $100k to not tell anyone

This is fine. Totally normal. Eric Newcomer reporting for Bloomberg:

Hackers stole the…

-

Facebook still allowed race exclusion for housing advertisers

Last year, ProPublica revealed that Facebook allowed housing advertisers to exclude races in…

-

Google collected Android users’ location without permission

Keith Collins reporting for Quartz:

Since the beginning of 2017, Android phones have…

-

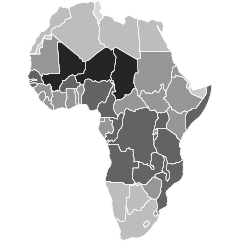

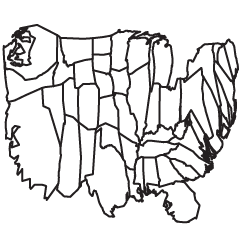

Troubling pick for Census Bureau deputy director

The administration’s current pick for deputy director of the United States Census Bureau…

-

Based on your morals, a debate with a computer to expose you to other points of view

Collective Debate from the MIT Media Lab gauges your moral compass with a…

-

Changing internet markets for sex work

The internet changed how sex workers and clients find each other and how…

-

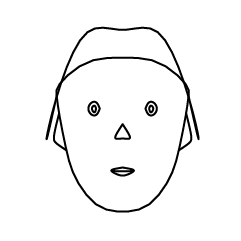

AI-generated celebrity faces look real

Researchers from NVIDIA published work with artificial intelligence algorithms, or more specifically, generative…

-

Data journalism lessons available from ProPublica Data Institute

ProPublica runs a small annual workshop to teach journalists a bit about data…

-

Dangers of CSV injection

George Mauer highlights how a hacker might access other people’s data by putting…

-

Machine learning demo with your webcam and GIFs

The Teachable Machine from Støj, Use All Five, and Google is a fun…

-

Stack Overflow salary calculator for developers

Stack Overflow used data from their developer survey to build a prediction model…

-

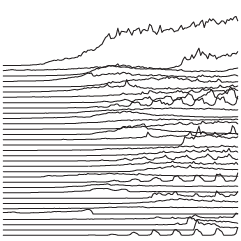

Looking for improbably frequent lottery winners

After hearing the story of reporter Lawrence Mower, who discovered fraudsters after a…

-

LEGO color scheme classifications

Nathanael Aff poked at LEGO brickset data with some text mining methods in…

-

xkcd: Ensemble model

That xkcd is such a joker. Munroe should start a comic.…

-

Q&A with This is Statistics

I did a quick Q&A with This is Statistics recently. It’s an ongoing…

-

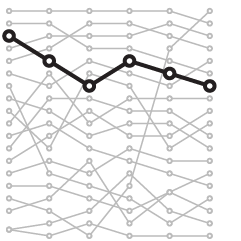

Machine learning to find spy planes

Last year, BuzzFeed News went looking for surveillance flight paths from the FBI…

-

Calculating the opposite of your job

Here’s a fun calculation from The Upshot.

The Labor Department keeps detailed and…

Visualize This: The FlowingData Guide to Design, Visualization, and Statistics
